Let us say you have a list of objects that contain Person information such as First Name, Last Name, Phone Number, Date of Birth and Address. Each object must be transformed into a new object that contains a Name field, Phone Number, Date of Birth and Address, where the Name field is the full name of the person and the remaining fields are copies of the corresponding field values in the original object.

If we attempt to write the solution in Java, the function to perform the conversion for each Person object will look something like:

    public PersonEx convert(Person person) {
        PersonEx personEx = new PersonEx();
        personEx.name = person.firstName + " " + person.lastName;
        personEx.phoneNumber = person.phoneNumber;
        personEx.address = person.address;
        personEx.dateOfBirth = person.dateOfBirth;
        return personEx;
    }

Now, the equivalent code in ECL is:

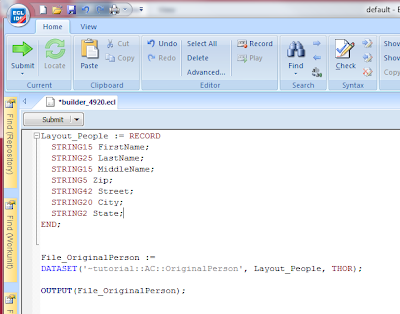

    PersonEx convert(Person person) := TRANSFORM
        SELF.name := person.firstName + ' ' + person.lastName;
        SELF := person;
    END;

The ECL code looks much simpler but achieves the same objective. Let me explain. ECL automates programming steps as much as possible, based on the information that is available to the compiler. In the above example, ECL knows that the return value is a record of type "PersonEx". Hence, using the keyword "SELF" is equivalent to declaring "PersonEx self = new PersonEx();" in Java: the instance creation and association are implicit. This eliminates the need to type all the extra code, which greatly simplifies your programming task.

There is some more implicit magic here. What does the statement "SELF := person;" do? You guessed right. It is equivalent to explicitly writing the following code:

SELF.phoneNumber := person.phoneNumber;

SELF.address := person.address;

SELF.dateOfBirth := person.dateOfBirth;

ECL, by introspection, compares the two records and automatically assigns every field in "SELF" that has the same name as a field in "person" and has not already been assigned.
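For readers who think in Java, the same-name copying described above can be imitated with reflection. This is only a rough sketch under stated assumptions: the class and method names (`SelfAssignSketch`, `copyMatchingFields`) are hypothetical, every field is assumed to be a `String`, and a `null` value is taken to mean "not yet assigned". It does its matching at run time, whereas the text above attributes the work to the ECL compiler, so treat it as an analogy rather than a description of how ECL is implemented.

```java
import java.lang.reflect.Field;

// Hypothetical illustration; not part of ECL or of the original article's code.
class SelfAssignSketch {

    // Stand-ins for the article's records. All fields are Strings (an assumption).
    static class Person {
        String firstName, lastName, phoneNumber, address, dateOfBirth;
    }

    static class PersonEx {
        String name, phoneNumber, address, dateOfBirth;
    }

    // Copies each field of source into the same-named field of target,
    // skipping target fields that already hold a value (non-null here).
    static void copyMatchingFields(Object source, Object target) {
        for (Field targetField : target.getClass().getDeclaredFields()) {
            try {
                targetField.setAccessible(true);
                if (targetField.get(target) != null) {
                    continue; // already assigned explicitly, leave it alone
                }
                Field sourceField =
                        source.getClass().getDeclaredField(targetField.getName());
                sourceField.setAccessible(true);
                targetField.set(target, sourceField.get(source));
            } catch (NoSuchFieldException e) {
                // The source has no field with this name, so nothing is copied.
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        Person person = new Person();
        person.firstName = "Jason";
        person.lastName = "Smith";
        person.phoneNumber = "555-0100";
        person.address = "1 Main St";
        person.dateOfBirth = "1980-01-01";

        PersonEx self = new PersonEx();
        self.name = person.firstName + " " + person.lastName; // explicit assignment
        copyMatchingFields(person, self);                     // rough analogue of SELF := person;

        System.out.println(self.name + ", " + self.phoneNumber + ", " + self.address);
    }
}
```

Here `name` keeps its explicitly assigned value because it is already set and `Person` has no field of that name, while the three remaining fields are filled in by name.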

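To summarize: ECL creates the result record implicitly, and a single "SELF := person;" assignment copies every same-named field that has not already been assigned.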

ECL provides many more features that make our programming lives easier. The following are some examples:

PROJECT(persons, convert(LEFT));

"LEFT" refers to the current record in "persons". "PROJECT" declares that the "convert" transformation is to be performed for every record in the data set "persons". Implementing something similar in Java would need an iterator, a loop and several variable initialization steps.
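To make the comparison concrete, here is a minimal Java sketch of what the one-line PROJECT stands in for. The wrapper class `ProjectSketch`, the `project` method, the constructor and the sample data are all hypothetical, and every field (including the date of birth) is assumed to be a `String`; the name is built first name first, as in the ECL TRANSFORM.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical wrapper class; the article does not define the full Java types.
class ProjectSketch {

    static class Person {
        String firstName, lastName, phoneNumber, address, dateOfBirth;

        Person(String firstName, String lastName, String phoneNumber,
               String address, String dateOfBirth) {
            this.firstName = firstName;
            this.lastName = lastName;
            this.phoneNumber = phoneNumber;
            this.address = address;
            this.dateOfBirth = dateOfBirth;
        }
    }

    static class PersonEx {
        String name, phoneNumber, address, dateOfBirth;
    }

    // Per-record conversion, mirroring the convert function discussed earlier.
    static PersonEx convert(Person person) {
        PersonEx personEx = new PersonEx();
        personEx.name = person.firstName + " " + person.lastName;
        personEx.phoneNumber = person.phoneNumber;
        personEx.address = person.address;
        personEx.dateOfBirth = person.dateOfBirth;
        return personEx;
    }

    // The explicit loop that PROJECT(persons, convert(LEFT)) expresses in one line.
    static List<PersonEx> project(List<Person> persons) {
        List<PersonEx> result = new ArrayList<>();
        for (Person person : persons) {
            result.add(convert(person));
        }
        return result;
    }

    public static void main(String[] args) {
        List<Person> persons = new ArrayList<>();
        persons.add(new Person("Jason", "Smith", "555-0100", "1 Main St", "1980-01-01"));
        persons.add(new Person("Maria", "Lopez", "555-0101", "2 Oak Ave", "1975-06-15"));

        for (PersonEx personEx : project(persons)) {
            System.out.println(personEx.name);
        }
    }
}
```

The result list, the loop variable and the `add` call are the bookkeeping that the ECL version leaves implicit.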

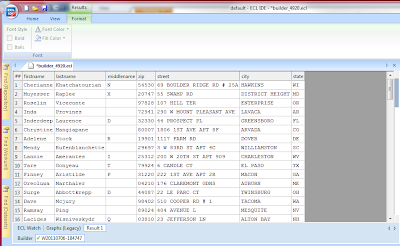

OUTPUT(persons(firstName = 'Jason'));

This action outputs the records in "persons" that satisfy the filter "firstName = 'Jason'".
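For comparison, a hand-written Java version of the same filter might look like the sketch below. The class `FilterSketch`, the method `filterByFirstName` and the sample records are hypothetical, and only the two name fields are modelled to keep the example short.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of the filter; not code from the original article.
class FilterSketch {

    static class Person {
        final String firstName;
        final String lastName;

        Person(String firstName, String lastName) {
            this.firstName = firstName;
            this.lastName = lastName;
        }
    }

    // Returns the records whose firstName equals the requested value.
    static List<Person> filterByFirstName(List<Person> persons, String firstName) {
        List<Person> matches = new ArrayList<>();
        for (Person person : persons) {
            if (firstName.equals(person.firstName)) {
                matches.add(person);
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        List<Person> persons = new ArrayList<>();
        persons.add(new Person("Jason", "Smith"));
        persons.add(new Person("Maria", "Lopez"));
        persons.add(new Person("Jason", "Brown"));

        // Rough analogue of OUTPUT(persons(firstName = 'Jason'));
        for (Person person : filterByFirstName(persons, "Jason")) {
            System.out.println(person.firstName + " " + person.lastName);
        }
    }
}
```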

As you can see in the above examples, ECL has been designed to be simple and practical. It enables data programmers to quickly turn their thoughts into programming tasks by keeping the syntax simple and minimal. Java is used in the examples to show how ECL's contrasting style can be used effectively to solve data processing problems. It does not mean that Java is not a practical language. It simply means that ECL is abstract enough to shield the programmer from the more complex language structures found in Java.